This article is about the of stability of neural networks output when dealing with the Image Super Resolution problem, i.e. that of recovering the original high-resolution image by a corrupted low-resolution version of it. This was the topic of the master’s thesis of Alessandro Alberti, Stability theory of Neural Networks for inverse problem with an application to Image Super Resolution, with supervisors prof. Marco Romito (University of Pisa) and dr. Nevio Dubbini (Miningful).

Following V. Anthun, M. Colbrook and A. Hansen [2], which proved the existence of a neural network for inverse problems with theoretically ensured stability properties, we adapted and developed a deep learning approach to the Image Super Resolution problem.

Image Super Resolution problem is modeled through linear inverse problems between finite dimensional vector spaces, i.e. the problem of recovering a vector x from a measurement y = Ax + e, where A is a linear sub-sampling operator and e is Gaussian white noise. These operators map vectors from a higher to a lower dimensional space, modeling the fact that the measurement y \in \mathbb{R}^m contains less information than the original signal x \in \mathbb{R}^N (N>m). A typical way to recover the signal x is reformulating the problem in terms of the following optimization problem:

argmin_{x\in \mathbb{R}^N}\,\,\, \lambda\ \|x\|_{l^1} + \|Ax-y\|_{l^2}Inverse problems can be interpreted, from a physical point of view, as evaluating a quantity by the measurement of another quantity directly accessible to the experiment, called measurement. With this reference, it is clear how a key property for a solution is ensuring that errors or noises in the measurements do not significantly impact the quality of the reconstruction. Thus, the stability issue considered here is the stability with respect to small perturbation of the input.

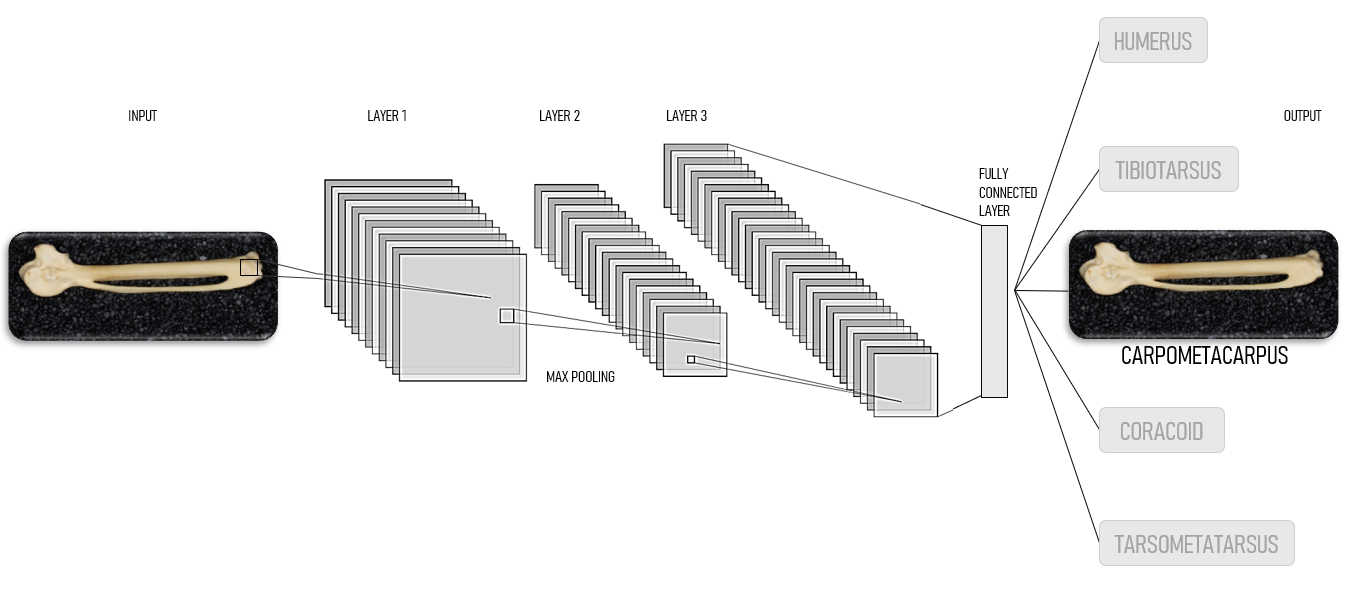

Overfitting is closely connected to the instability of a neural network for this kind of problems. Therefore, the stability guarantees are obtained by imposing some conditions on the developed algorithm in order to prevent overfitting in the solution. The resulting model is called FIRENET (Fast Iterative REstarted NETwork, as in [2]). The theoretical analysis shows how classical deep learning training procedure typically leads to unstable methods. Thus, FIRENET has a feed-forward neural network structure but does not require a training procedure.

When applying this neural network to the Image Super Resolution problem we first reformulated the problem in terms of a linear inverse problem. To do this we assume that the low-resolution input image is obtained by the action of a degradation operator on the target high-resolution image. This operator adds a blurring effect on the image and then extracts only a subset of its pixels. During the numerical experiment, we artificially constructed the low-resolution images from high-resolution images in order to compare the ground truth and the image reconstructed by FIRENET.

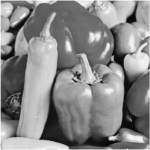

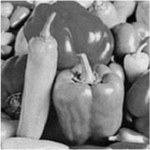

In the experimental analysis we compared the performance of FIRENET with a simple reconstruction model known as bicubic interpolation. In all these experiments the number of pixels of the low-resolution image was chosen to be 16 times smaller with respect to the relative high-resolution one. Moreover, for the experiments we considered grayscale images, which are represented by real-valued matrices. The problem and the models can be generalized to the case of colored images by considering the three RGB layers of the image separately.

Figure 1 below shows the results on the PEPPER image: going from left to right, the first two images show the high-resolution image and the blurred sub-sampled image given as input to the Super Resolution algorithms, while the third and the fourth images show the reconstructions obtained with the bicubic interpolation and with FIRENET, respectively.

Fig. 1: Super Resolution of the grayscale PEPPER image with no noise in the measurements. From left to right: ground thruth; Input; Bicubic; FIRENET.

This first test considers noiseless measurements (e = 0), and by the visual analysis it is clear that FIRENET algorithm is more accurate in recovering the ground truth image with respect to the bicubic interpolation. Indeed, the FIRENET-reconstructed image appears definitely less blurred and more resoluted than both the input and the image enhanced through bicubic interpolation.

This claim remains true in the noisy case, as shown by figures 2 and 3. Here, we applied to the input image some random noise, with two different magnitudes, of approximately 1% and 2%. This noise represents a random error in the measurement method, such as the camera used to capture the image.

A notable feature of this model is its relatively low computational time: the Super Resolution processing of these 128-by-128 pixel low-resolution images takes approximately 9 seconds. This key property led us to verify whether FIRENET could be used as a pre-processing procedure for other imaging problems, such as image classification problems, on low-resolution images.

Fig. 2: SR of the grayscale PEPPER image with 1% noise

Fig. 3: SR of the grayscale PEPPER image with 2% noise

Therefore, we developed a deep learning model for image classification, and then tested it on the high-resolution, the blurred sub-sampled and the FIRENET super-resolved versions. For this last analysis, we considered a binary classification problem on the “Dog-Cat” dataset and we used a convolutional neural network trained on the high-resolution images as the classification algorithm. When tested on the high-resolution images, the trained model achieves 91% of accuracy and this score drops to 65% when tested on the low-resolution (artificially) corrupted version of the same images. However, when testing the model on the super-resolved versions of the images, we obtain 86% of accuracy. These results confirm the fact that FIRENET is able to recover features and details of the high-resolution images from their corrupted version and they provide an additional measure to quantify the increase in resolution in terms of the behaviour of an automated trained algorithm. The curios reader can see this more technical overview about the construction of FIRENET and the underlying theory.

REFERENCES

This blog post has been obtained from the master’s thesis of Alessandro Alberti: Stability theory of Neural Networks for inverse problem with an application to Image Super Resolution (supervisors prof. Romito from University of Pisa and dr. Dubbini from Miningful).

[1] Chambolle, Antonin and Pock, Thomas, A First-Order Primal-Dual Algorithm for Convex Problems with Applications to Imaging, Journal of Mathematical Imaging and Vision vol.40, pp. 120-145 (2010).

[2] Colbrook, Matthew J. and Antun, Vegard and Hansen, Anders C., The difficulty of computing stable and accurate neural networks: On the barriers of deep learning and Smale’s 18th problem, Proceedings of the National Academy of Sciences, vol. 119 (2022).